AI Could Be Great for Medical Research, But...

Whether its master's intent is good or evil, the genie from Aladdin grants his wishes, and the animated film shows both the positive and the negative outcomes that follow from the wisher's intent. In the real world, newspapers in wartime served both as a weapon against opposing parties and as a way of keeping people informed about unfolding events. Knowledge, likewise, can serve different purposes depending on who holds it, and Artificial Intelligence (AI) is no different. Much like the world's oceans, most of its potential remains unexplored, yet the small fraction explored so far is already driving remarkable progress across industries.

In healthcare, medical research in particular is a field that AI promises to revolutionize in two major ways: by optimizing existing research, and by uncovering findings beyond what human researchers can reach. Put simply, AI is not bound by many of the limits humans face; unlike human researchers, for example, it does not fall ill. It can therefore dig deep into datasets quickly, without breaks, sudden illness, or fatigue.

AI is also believed to help in areas where human clinicians struggle, such as identifying tuberculosis on chest radiographs.

It can also make the research process more cost-efficient.

Taiwanese computer scientist, businessman, and author Kai-Fu Lee recently shared his views on the future of AI. He discusses machine learning, a branch of AI, whose algorithms he sees as able to focus on tasks that other forms of AI cannot handle. Lee believes machine learning algorithms will likely bring AI to a deeper understanding of how human cognition works. If progress continues, it will probably lead to more breakthroughs and may even enter the realm of superintelligence.

However, the technology still needs more work before it matures. There is also the matter of AI ethics, which must at least answer questions about data collection, intellectual property, and more.

Need for Human Oversight

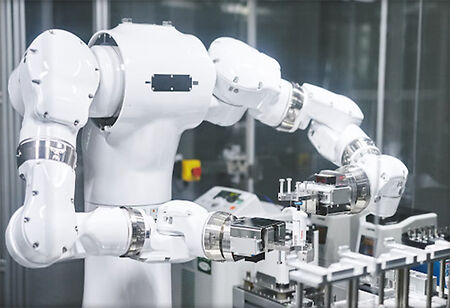

Take surgical robots, which are said to operate logically rather than empathetically. However advanced AI becomes, health practitioners are still needed to observe important behavioral changes that can help diagnose or prevent medical complications, because AI depends on human input and review to make effective use of its data.

Missing Out on Social Standards

Sometimes a patient's needs go beyond their current physical ailments. The right advice for a given patient can depend on social, economic, and historical circumstances. For instance, an AI system might assign a patient to a particular care facility based on a diagnosis, without taking the patient's financial limitations or personal preferences into consideration.

Using an AI system also raises privacy concerns. Companies like Amazon have wide freedom to gather and use data, whereas hospitals may struggle to obtain data from Apple mobile devices, for example. Several societal and governmental restrictions may therefore limit AI's ability to support medical procedures.

Misinterpretation Possibilities

Medical AI relies heavily on diagnostic information drawn from millions of cataloged cases. When information on certain illnesses, demographics, or environmental variables is limited, misdiagnosis becomes possible. This factor is especially critical when the system recommends a specific treatment.

Whatever the system, some data will inevitably be missing. For prescriptions, details about specific populations and their reactions to treatment may be absent, which can make it difficult to diagnose and treat individuals from a particular demographic group.

AI is continually evolving to account for these data limitations, but it is crucial to remember that certain groups may still be left out of existing domain knowledge.

Prone to Cyberattacks

Because AI typically depends on data networks, AI systems are vulnerable to security threats. With the advent of offensive AI, stronger cybersecurity will be needed to ensure the technology's long-term viability. According to Forrester Consulting, a research and advisory firm, most security decision-makers believe offensive AI is a growing concern.

Just as AI uses data to make systems smarter and more accurate, AI-powered cyberattacks will learn from each success and failure, making them harder to predict and prevent. Once serious threats manage to outwit security measures, they will be considerably harder to stop.

Regulatory Compliance

Regulatory approval for AI technology can be difficult to obtain. In the European Economic Area (EEA), healthcare algorithms that qualify as medical devices must obtain CE marking, a certification mark indicating compliance with health, safety, and environmental protection requirements. More precisely, the product must meet the requirements of the EU Medical Device Regulation (EU) 2017/745 (MDR).

Product owners must specify the algorithm's ‘Intended Use’ carefully and precisely, as this Data Science guide to AI legislation emphasizes. Failing to do so can lead to misunderstandings regarding the required level of transparency.

Transparency

Transparency remains a major issue for AI developers, both in meeting strict regulations and in front-line healthcare delivery. Because some algorithms recommend specific procedures and treatments to doctors, doctors need enough transparency to understand and explain why a recommendation was made.

Could AI Replace Doctors and Clinicians?

In the near future, we might see AI fully involved in many aspects of clinical research, from drug discovery and diagnosis to treatment. With automation spreading into most areas of medical research, some fear that robots could take over the healthcare sector. Despite these concerns about AI replacing doctors and clinicians, that may not be the case. Why? So far, AI has only been helping doctors: the accurate information it provides saves doctors valuable time and helps them make informed decisions. That said, new technologies must integrate with existing workflows and, of course, gain regulatory approval. Rather than entering an era of machines dominating humanity, we can see that early AI applications have complemented and optimized the vital work of human researchers and clinicians.